Along the way, we have also covered functions such as map, flatMap, values, zipWithIndex and sorted.

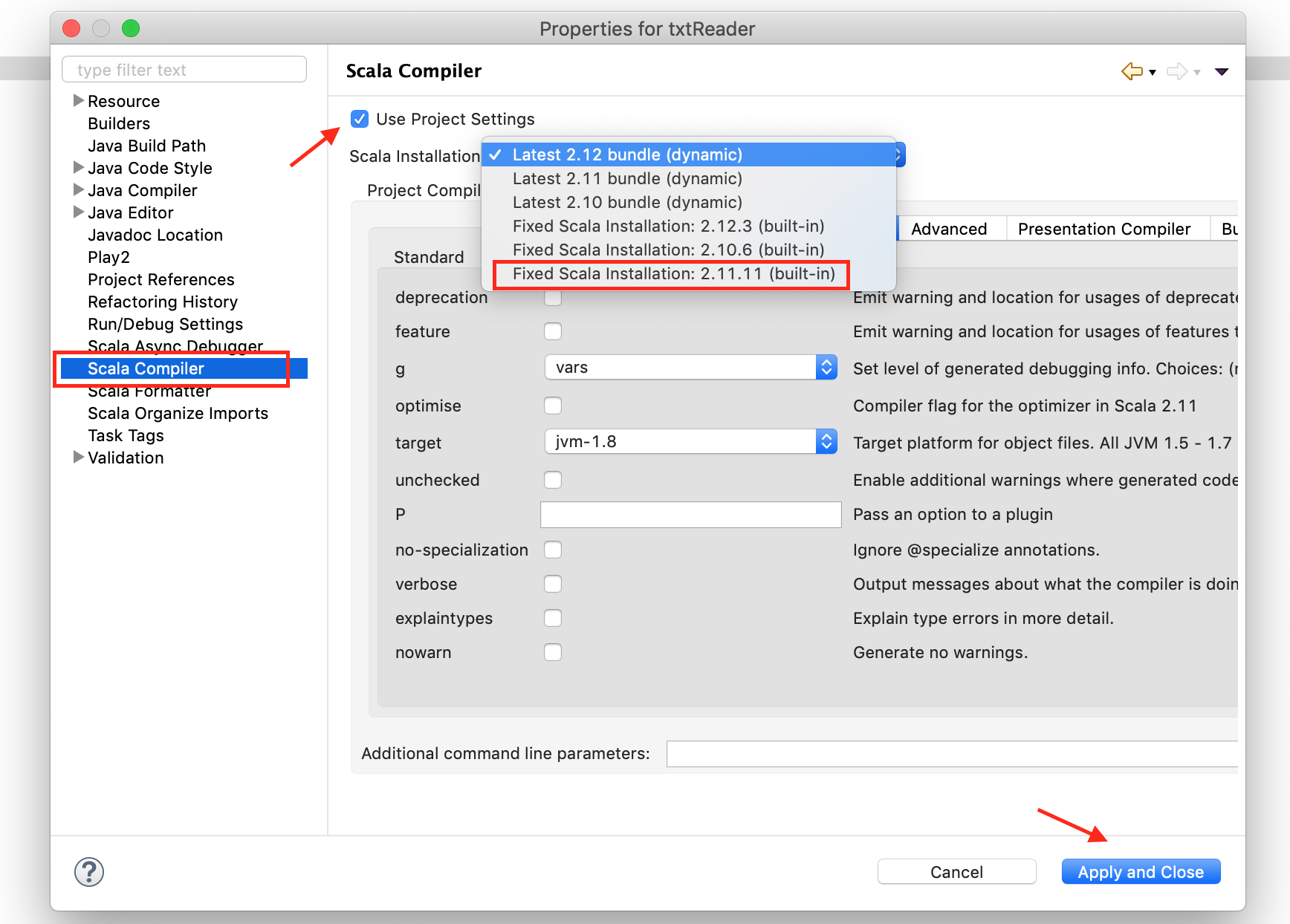

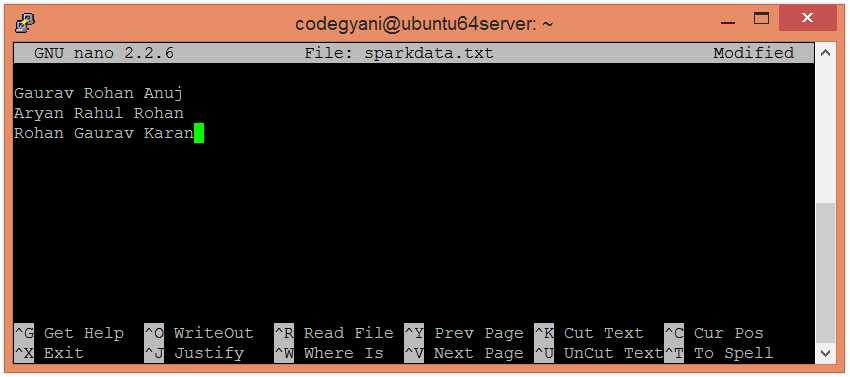

Sometimes data arrives in a shape that we would like to transpose after loading it into a DataFrame. In this post, we have seen how to transpose data in a DataFrame.

Apache Spark is a fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs. Spark can manage big data collections with a small set of high-level primitives like map, filter, groupby, and join. Generally speaking, Spark provides 3 main abstractions to work with data (RDD, DataFrame and Dataset), and the RDD is the main approach to working with unstructured data. The steps here start from an RDD and convert it to a DataFrame.

Step 1: Load data

First, open pyspark and load the data into an RDD:

`empRDD = sc.textFile("file:////root/bdp/spark/data/emp_data.txt")`

Here, my source file is located in the local path under /root/bdp/data, and sc is the Spark Context, which has already been created while opening PySpark.

Create the header with the columns below:

`headerList =`

Let's check the RDD values using the command below:

`empRDD.take(10)`

Let's see these values in a structured way:

`empMapRDD.toDF().show()`

In this step, we convert each value into a triple of its row index, its column index and the value itself.

zipWithIndex(): It pairs each row with an index value.

flatMap(): It transforms each value.

`empMapRDDT1.toDF().show()`

The next 3 steps group the values by key, sort them, and keep each value at the appropriate index position.

`empMapRDDT2 = empMapRDDT1.map(lambda t: (t[1], (t[0], t[2]))).groupByKey()`

`empMapRDDT3 = empMapRDDT2.map(lambda kv: sorted(list(kv[1]), key=lambda p: p[0]))`

`empMapRDDT4 = empMapRDDT3.map(lambda x: [e for (i, e) in x])`

values(): It takes the value of each key-value pair.

If we check the last RDD value as a DataFrame with the header:

`empMapRDDT4.toDF(headerList).show()`
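To make the index bookkeeping easier to follow, here is a minimal sketch of the same steps in plain Python, with lists standing in for the RDDs. It is not the post's Spark code: the sample rows are hypothetical, and names such as `cells` and `grouped` are introduced only for illustration.

```python
from collections import defaultdict

# Hypothetical sample rows (not taken from emp_data.txt), already split on ",".
rows = [
    ["empno", "ename", "deptno"],
    ["1", "A", "10"],
    ["2", "B", "20"],
]

# Like zipWithIndex + flatMap: one (row_index, column_index, value) triple per cell.
cells = [(i, j, value) for i, row in enumerate(rows) for j, value in enumerate(row)]

# Like map + groupByKey: key every cell by its column index.
grouped = defaultdict(list)
for i, j, value in cells:
    grouped[j].append((i, value))

# Like the sort and value-extraction steps: order each group by the original
# row index, then keep only the values. Groups are read in column order here.
transposed = [
    [value for _, value in sorted(grouped[j], key=lambda pair: pair[0])]
    for j in sorted(grouped)
]

print(transposed)
# [['empno', '1', '2'], ['ename', 'A', 'B'], ['deptno', '10', '20']]
```

One design point worth noting: this sketch reads the groups in column order, whereas a Spark `groupByKey()` gives no ordering guarantee across keys. If the order of the transposed rows matters, sorting by key (for example with `sortByKey()`) before dropping the key is one possible way to pin it down.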

# Function to clean text data in Spark RDD download

We received the data with rows in place of columns and columns in place of rows. The requirement is to transpose the data, i.e. change rows into columns and columns into rows.

Sample data:

`empno,ename,designation,manager,hire_date,sal,deptno`

`7934,MILLER,CLERK,9782,1300.00,10`

You can also download the file attached below: emp_data
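As a quick illustration of what the requirement means, the sketch below transposes a tiny table in plain Python. The table is a hypothetical three-column extract built from a few of the sample values, not the full file, and `zip(*table)` only suits data that fits in memory on one machine.

```python
# Hypothetical reduced extract: three of the columns, one data row.
table = [
    ["empno", "ename", "deptno"],
    ["7934", "MILLER", "10"],
]

# zip(*table) pairs up the i-th element of every row, so each original
# column becomes a row in the result.
transposed = [list(column) for column in zip(*table)]

for row in transposed:
    print(row)
# ['empno', '7934']
# ['ename', 'MILLER']
# ['deptno', '10']
```

The Spark version has to reach the same result without collecting the data on one node, which is why it tracks row and column indexes explicitly.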

Let’s take a scenario where we have already loaded data into an RDD/Dataframe.
